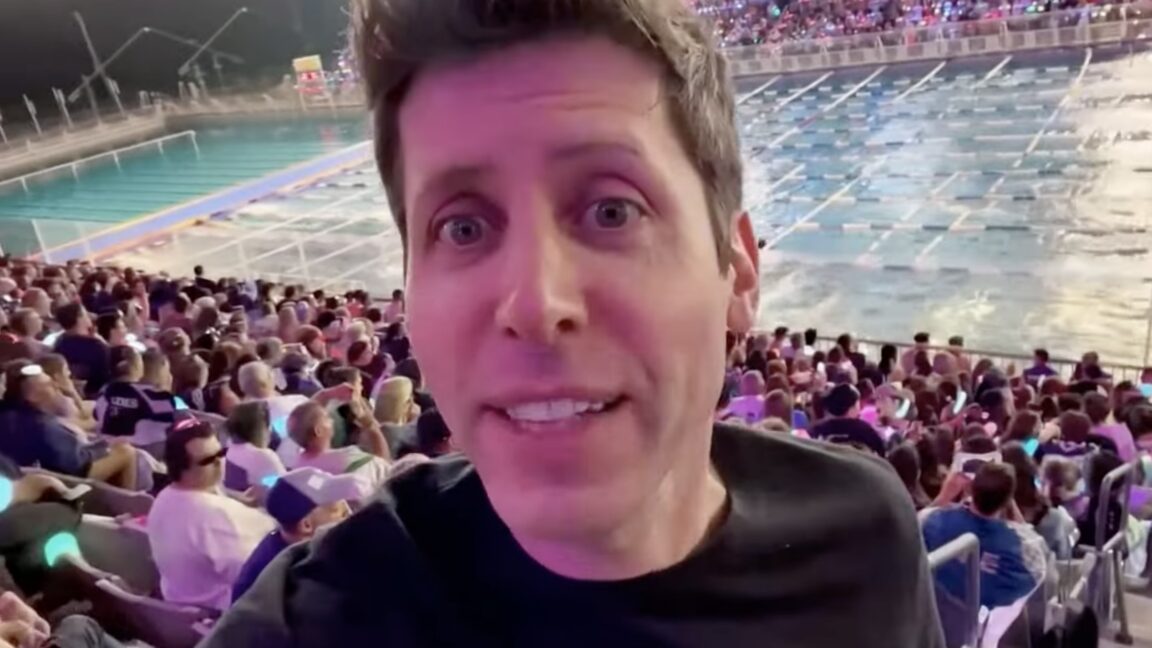

"On Tuesday, OpenAI announced Sora 2, its second-generation video-synthesis AI model that can now generate videos in various styles with synchronized dialogue and sound effects, which is a first for the company. OpenAI also launched a new iOS social app that allows users to insert themselves into AI-generated videos through what OpenAI calls 'cameos.' OpenAI showcased the new model in an AI-generated video that features a photorealistic version of OpenAI CEO Sam Altman talking to the camera in a slightly unnatural-sounding voice amid fantastical backdrops."

"Regarding that voice, the new model can create what OpenAI calls 'sophisticated background soundscapes, speech, and sound effects with a high degree of realism.' In May, Google's Veo 3 became the first video-synthesis model from a major AI lab to generate synchronized audio as well as video. Just a few days ago, Alibaba released Wan 2.5, an open-weights video model that can generate audio as well. Now OpenAI has joined the audio party with Sora 2."

"The model also features notable visual consistency improvements over OpenAI's previous video model, and it can also follow more complex instructions across multiple shots while maintaining coherency between them. The new model represents what OpenAI describes as its 'GPT-3.5 moment for video,' comparing it to the ChatGPT breakthrough during the evolution of its text-generation models over time. Sora 2 appears to demonstrate improved physical accuracy over the original Sora model from February 2024."

OpenAI's Sora 2 generates videos in multiple styles with synchronized dialogue, realistic sound effects, and background soundscapes, making it the company's first model to pair video with synchronized audio. The launch is accompanied by an iOS social app that lets users insert themselves into generated videos as "cameos." OpenAI's demonstration video features a photorealistic Sam Altman speaking, in a slightly unnatural-sounding voice, amid fantastical backdrops. Sora 2 improves visual consistency and follows complex multi-shot instructions while keeping the shots coherent, and OpenAI claims improved physical accuracy, including the ability to simulate Olympic gymnastics and triple axels with realistic physics. Competing video models that also generate audio include Google's Veo 3 and Alibaba's open-weights Wan 2.5.

Read at Ars Technica
