"Controlling gradient recording in TensorFlow enables users to specify which variables are monitored by the GradientTape, enhancing efficiency during complex operations."

"Using tf.GradientTape.stop_recording is crucial for temporarily suspending gradient tracking, allowing computations that don't require differentiation while optimizing performance."

"The introduction of higher-order gradients and custom gradients in SavedModel is essential for advanced users looking to leverage TensorFlow's full capabilities in automatic differentiation."

"Multiple tapes can be utilized within TensorFlow to compute gradients through various paths, facilitating complex gradient computations necessary for deep learning applications."

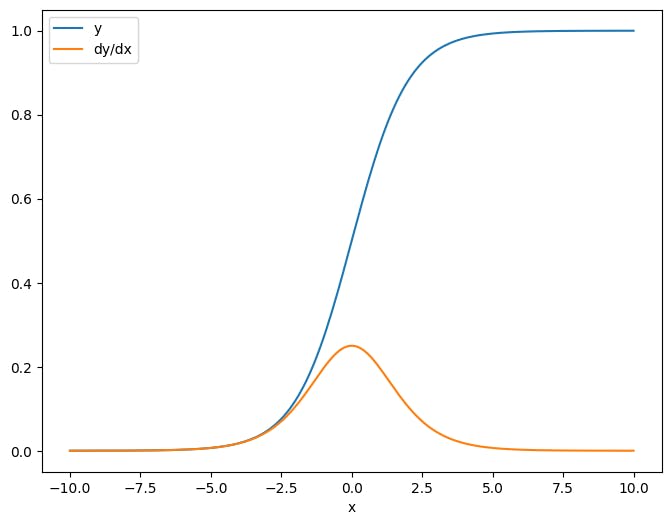

The guide on gradients and automatic differentiation covers what is needed to calculate gradients in TensorFlow, then explores advanced features of the tf.GradientTape API: stopping and resetting recording, and implementing custom gradients. Users can control which variables a tape watches, use multiple tapes for separate gradient calculations, and compute higher-order gradients and Jacobians. Temporarily suspending recording with stop_recording lets operations that don't require differentiation run without being traced, which reduces overhead.
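The features named in the summary can be illustrated with a minimal sketch, assuming TensorFlow 2.x in eager mode. The variable names and the `clip_upstream` helper are hypothetical, chosen for illustration only. The guide's own examples may differ.

```python
import tensorflow as tf

# 1. Control what is watched: turn off automatic variable watching
#    and opt in to x1 only.
x0 = tf.Variable(0.0)
x1 = tf.Variable(10.0)
with tf.GradientTape(watch_accessed_variables=False) as tape:
    tape.watch(x1)
    y = tf.math.sin(x0) + tf.nn.softplus(x1)
watched = tape.gradient(y, {"x0": x0, "x1": x1})
# watched["x0"] is None because x0 was never watched.

# 2. Suspend recording: ops inside stop_recording() are not traced.
a = tf.Variable(2.0)
with tf.GradientTape(persistent=True) as tape:
    b = a * a
    with tape.stop_recording():
        c = b * b  # computed normally, but invisible to the tape
db_da = tape.gradient(b, a)  # 2 * a = 4.0
dc_da = tape.gradient(c, a)  # None: c was produced while recording was off
del tape  # release the persistent tape's resources

# 3. Multiple (nested) tapes: the outer tape records the inner
#    gradient computation, giving a second derivative.
p = tf.Variable(1.0)
with tf.GradientTape() as outer:
    with tf.GradientTape() as inner:
        q = p * p * p
    dq_dp = inner.gradient(q, p)      # 3 * p**2 = 3.0
d2q_dp2 = outer.gradient(dq_dp, p)    # 6 * p = 6.0

# 4. Custom gradient: identity in the forward pass, clips the
#    upstream gradient's norm in the backward pass.
@tf.custom_gradient
def clip_upstream(t):
    def backward(dy):
        return tf.clip_by_norm(dy, 0.5)
    return t, backward

v = tf.Variable(2.0)
with tf.GradientTape() as tape:
    out = clip_upstream(v * v)
dout_dv = tape.gradient(out, v)  # 0.5 (clipped upstream) * 2 * v = 2.0
```

In part 2 the tape is created with `persistent=True` only so that `gradient` can be called twice; a non-persistent tape releases its resources after the first call.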

Read at Hackernoon
