#gpt-52

#ai-competition #openai #enterprise-ai #ai-benchmarks #latex #chatgpt #ai-assisted-research #model-variants

from Business Insider

5 days ago: OpenAI is retiring its popular 'yes man' version of ChatGPT again - and this time it may be for good

OpenAI is sending everyone's favourite "yes man" version of ChatGPT back into retirement. In a blog post on Thursday, the company said it would sunset GPT-4o alongside GPT‑4.1, GPT‑4.1 mini, and OpenAI o4-mini on February 13. OpenAI gave GPT-4o a special mention in its announcement after many users became attached to its "conversational style and warmth" last year, which prompted the company to reinstate it following user backlash in August.

Artificial intelligence

from ZDNET

1 week ago: Meet Prism, OpenAI's free research workspace for scientists - how to try it

Prism is a free, GPT-5.2–powered collaborative AI workspace that streamlines drafting, revision, collaboration, and LaTeX-native preparation for scientific research without replacing human leadership.

from Computerworld

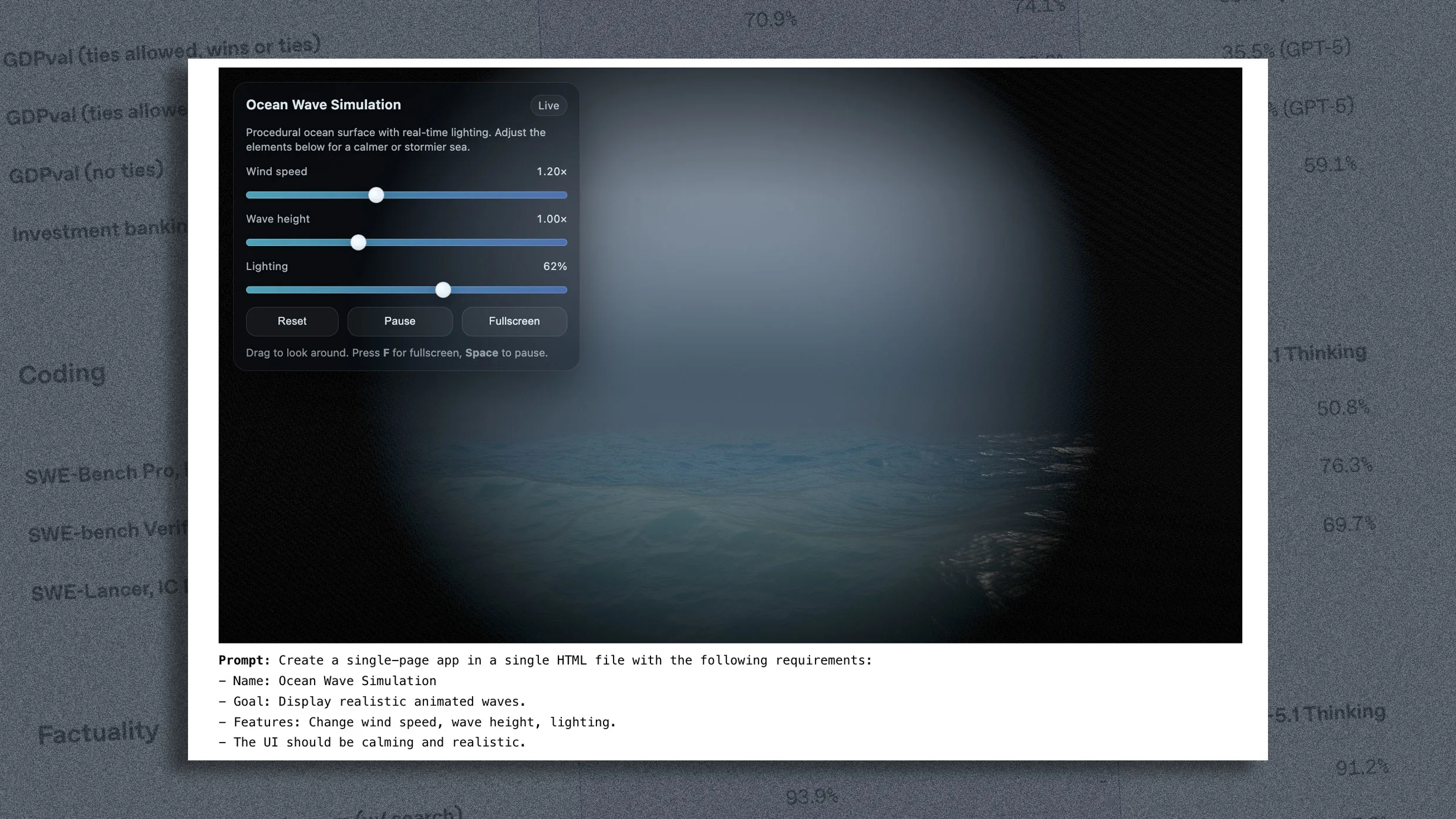

1 month ago: OpenAI launches GPT-5.2 as it battles Google's Gemini 3 for AI model supremacy

The new model, available in Instant, Thinking, and Pro performance tiers, offers major improvements across a range of benchmarks, the company said. On OpenAI's GDPval benchmark, which compares the model's ability to complete 44 different business tasks against the standard of human experts, GPT-5.2 matched or exceeded human users in 70.9% of tests, compared with 38.8% for GPT-5.1, across the Instant (basic), Thinking (deeper reasoning), and Pro (research-grade) versions.

Artificial intelligence

from ZDNET

1 month ago: I tested GPT-5.2 and the AI model's mixed results raise tough questions

Since the generative AI boom began in 2023, I've run a series of repeatable tests on new products and releases. ZDNET regularly tests the programming ability of chatbots, their overall performance, and how various AI content detectors perform. So, let's run some tests on OpenAI's claims for its latest model, shall we?

Artificial intelligence

Tech industry

from ZDNET

1 month ago: Are GPT-5.2's new powers enough to surpass Gemini 3? Try it and see

GPT-5.2 is a high-capability AI model optimized for professional knowledge work, boosting productivity in spreadsheets, presentations, coding, image perception, long contexts, and multi-step projects.