From InfoQ

Enhancing A/B Testing at DoorDash with Multi-Armed Bandits

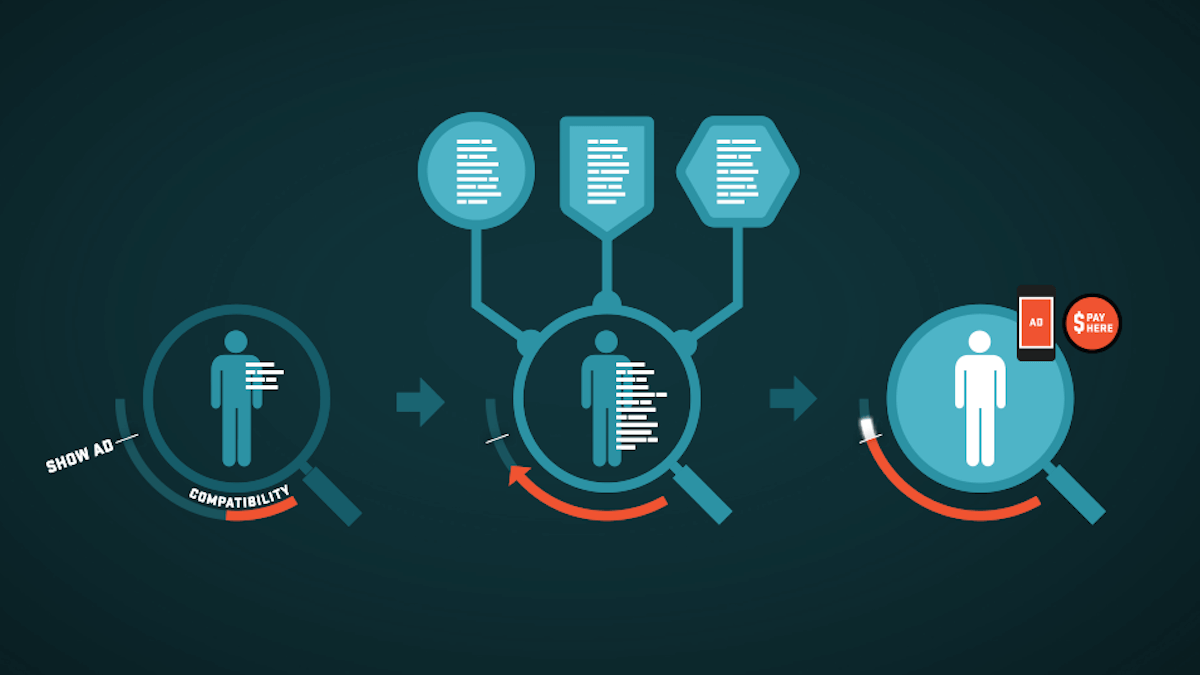

While experimentation is essential, traditional A/B testing can be excessively slow and expensive, according to DoorDash engineers Caixia Huang and Alex Weinstein. To address these limitations, they adopted a "multi-armed bandits" (MAB) approach to optimize their experiments. When running experiments, organizations aim to minimize the opportunity cost, or regret: the loss incurred by serving less effective variants to part of the user base while the experiment is still running.
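To make the notion of regret concrete, the sketch below compares a fixed 50/50 A/B split with a bandit policy on simulated traffic. It assumes Bernoulli conversions and uses Thompson sampling with Beta(1, 1) priors as the bandit policy. Thompson sampling is one common MAB strategy and is chosen here for illustration, not as a description of DoorDash's implementation. The conversion rates, round counts, and function names (`thompson_sampling_regret`, `fixed_split_regret`) are hypothetical. Regret is measured as the expected loss per request, `best_rate - rate_of_served_variant`, summed over all requests.

```python
import random


def thompson_sampling_regret(true_rates, rounds, seed=0):
    """Simulate Bernoulli Thompson sampling and return cumulative expected regret.

    Assumption: each variant converts with a fixed, hypothetical probability.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k  # observed conversions per variant
    failures = [0] * k   # observed non-conversions per variant
    best = max(true_rates)
    regret = 0.0
    for _ in range(rounds):
        # Draw one plausible conversion rate per variant from its Beta posterior.
        samples = [rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)]
        # Serve the variant whose sampled rate is highest.
        arm = max(range(k), key=lambda i: samples[i])
        # Simulate whether this request converts, then update that variant's counts.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
        # Expected opportunity cost of not serving the best variant.
        regret += best - true_rates[arm]
    return regret


def fixed_split_regret(true_rates, rounds):
    """Regret of a classic A/B test that rotates traffic evenly across variants."""
    best = max(true_rates)
    return sum(best - true_rates[t % len(true_rates)] for t in range(rounds))


if __name__ == "__main__":
    rates = [0.05, 0.10]  # hypothetical conversion rates for variants A and B
    print(f"Fixed A/B split regret: {fixed_split_regret(rates, 10_000):.1f}")
    print(f"Thompson sampling regret: {thompson_sampling_regret(rates, 10_000):.1f}")
```

The even split keeps sending half of all traffic to the weaker variant for the whole experiment. The bandit shifts traffic toward the variant that looks better as evidence accumulates, so its cumulative regret in this simulation is typically a small fraction of the fixed split's. The trade-off is that uneven allocation complicates classical significance testing.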